While Apple’s move is an ambitious step toward protecting children, some privacy experts have raised security concerns, arguing that Apple is normalizing the idea that it is safe to build systems that scan users’ phones for prohibited content. As per the announcement, Apple will introduce three new features with the upcoming iOS 15, iPadOS 15, macOS Monterey, and watchOS 8 updates to help limit the spread of sexually explicit online content that involves children. The update includes new tools and resources in the Messages app, CSAM detection technology, and expanded guidance in Siri and Search. Read on for more detail on how these features work and whether you need to be concerned.

Safer ‘Messages’ App

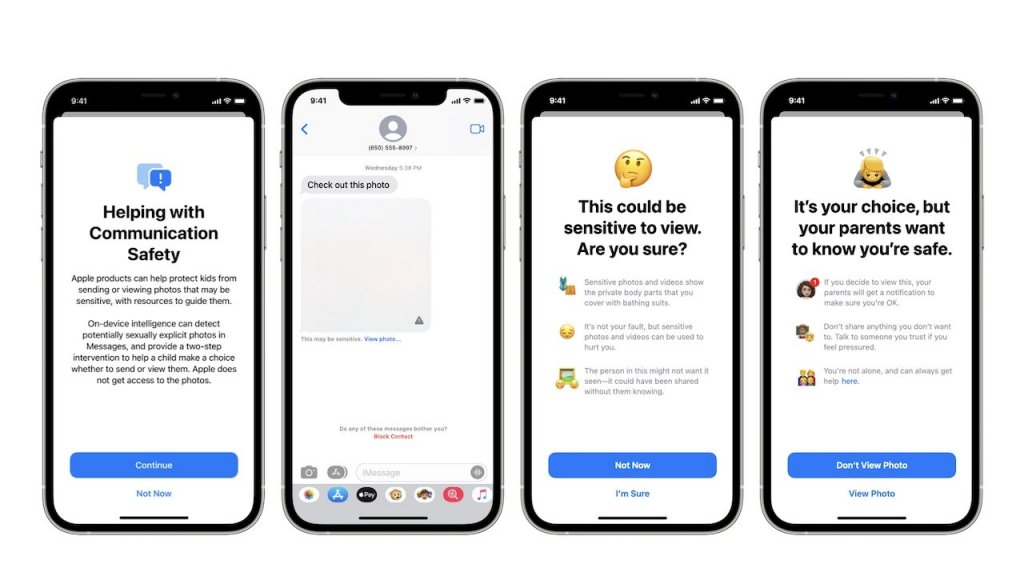

To keep its youngest users safe, Apple is planning to introduce new communication tools that let parents play a more active role in helping their children navigate the internet safely. For this, the Messages app will get an update that pairs new tools with on-device machine learning. When a child receives or sends a sexually explicit photo, this combination will warn the child and, if the child proceeds, notify their parents.

How Does it Work?

Suppose the Messages app receives this kind of sensitive content. In that case, on-device machine learning analyzes the image attachments to determine whether they are sexually explicit. If an image is judged to be sensitive, the photo is blurred and the child sees a warning. In addition, the child is presented with helpful resources and reassured that it is okay if they do not wish to view the photo. As an additional precaution, the child is told that if they do view the photo, a message will be sent to their parents to make sure they’re safe.

The same protection works the other way around. If a child tries to send sexually explicit content, they are first warned by the protection system. If the child still chooses to send the photo(s) after the warning, their parents are notified with a message. The feature loops parents in by keeping them informed about their child’s unsafe online activity.

The feature is designed so that private communications remain unreadable to Apple. Though Apple assures users that it won’t get access to the messages, various security experts have accused Apple of building an infrastructure for surveillance.
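The warn-then-notify flow described above can be sketched as a small decision function. This is only an illustration of the logic as the article describes it, not Apple’s implementation: `Outcome`, `handle_photo`, and the `is_explicit` flag (which stands in for the on-device classifier’s verdict) are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    blurred: bool = False          # photo is hidden behind a blur
    warned: bool = False           # child is shown a warning and resources
    parent_notified: bool = False  # a message is sent to the parents


def handle_photo(direction: str, is_explicit: bool, child_proceeds: bool) -> Outcome:
    """Model what happens to one photo in a child's Messages conversation.

    direction      -- "incoming" (received) or "outgoing" (about to be sent)
    is_explicit    -- assumed verdict of the on-device classifier
    child_proceeds -- True if the child views/sends the photo despite the warning
    """
    if direction not in ("incoming", "outgoing"):
        raise ValueError("direction must be 'incoming' or 'outgoing'")
    if not is_explicit:
        return Outcome()  # ordinary photos pass through untouched
    return Outcome(
        blurred=(direction == "incoming"),  # only received photos are blurred
        warned=True,                        # the warning comes first either way
        parent_notified=child_proceeds,     # parents hear about it only if the child goes ahead
    )
```

For example, `handle_photo("incoming", True, child_proceeds=False)` yields a blurred photo and a warning but no parental notification, since the child chose not to view it.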

CSAM Detection Method

To address the spread of Child Sexual Abuse Material (CSAM) over the internet, Apple will soon roll out new technology in iOS and iPadOS. With this technology, Apple can detect images that match known CSAM. After a human review of these potential matches, Apple can report such instances to the National Center for Missing and Exploited Children (NCMEC). The NCMEC, in turn, works with US law enforcement agencies, which investigate the matter further.

How Does it Work?

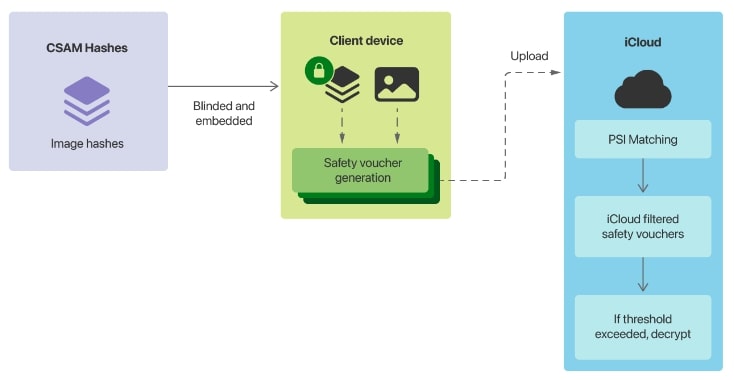

As Apple stated repeatedly in the announcement, its new technology is designed with user privacy in mind. The same applies to its CSAM detection method, which doesn’t scan images in the cloud. Instead, it uses a system in which each image is matched against a database of known CSAM. This database consists of CSAM image hashes provided by child safety organizations like NCMEC. Apple transforms these hashes into an encoded set that is securely stored on users’ devices.

The method uses cryptographic technology to carry out an on-device matching process before an image is stored in iCloud Photos. This technology, called private set intersection, determines whether there is a match and stores the result in an encrypted safety voucher. The voucher is then uploaded to iCloud Photos along with the image, without revealing the result.

The new system also incorporates ‘Threshold Secret Sharing’, which works together with the private set intersection step. The threshold is simply a limit on the number of matches against known CSAM. Only when an account exceeds this threshold can Apple decrypt the vouchers corresponding to the matching CSAM images. After manually reviewing each case, Apple can then decide to report it to NCMEC and disable the user’s account, if need be. However, users who believe they have done nothing wrong and that their account has been mistakenly flagged can file an appeal to have their account restored.
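To make the threshold idea concrete, here is a minimal sketch of Shamir’s secret sharing, a standard threshold scheme. It is an illustration only and is not claimed to be Apple’s actual construction: the article does not say which scheme Apple uses, and `PRIME`, `make_shares`, and `recover_secret` are names chosen for this example. Think of the secret as a decryption key and of each share as the piece carried by one matching voucher.

```python
import secrets

# Arithmetic is done modulo a prime; 2**127 - 1 is a Mersenne prime.
PRIME = 2**127 - 1


def make_shares(secret: int, threshold: int, count: int) -> list[tuple[int, int]]:
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    if not (1 <= threshold <= count):
        raise ValueError("need 1 <= threshold <= count")
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret % PRIME] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def poly(x: int) -> int:
        acc = 0
        for c in reversed(coeffs):  # Horner's rule
            acc = (acc * x + c) % PRIME
        return acc

    # Each share is one point (x, poly(x)) on the polynomial, with x != 0.
    return [(x, poly(x)) for x in range(1, count + 1)]


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate the polynomial at x = 0 from the given shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret
```

With `shares = make_shares(key, threshold=3, count=5)`, any three shares passed to `recover_secret` return `key`, while one or two shares reveal nothing useful about it. That mirrors the behavior described above: below the threshold, the vouchers stay unreadable.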

New Resourceful ‘Siri and Search’

Apple has also taken steps to update the Siri and Search features on its devices. The update will add guidance resources to help keep children and parents safe on the internet.

How Does it Work?

As part of Apple’s expanded guidance, Siri and Search will surface resources that can help in unsafe situations online. If a user asks Siri how to report CSAM or child exploitation, the updated guidance will point them to resources on where and how to file a report. In addition, the update lets Siri and Search intervene when users search for queries related to CSAM, explaining that interest in such topics is both harmful and problematic. Those users will also be offered further guidance and help from resources shared by Apple’s partners.

Wrapping Up

This brings us to the end of our article on Apple’s new scanning technology. With technology that passes actionable information about child abuse material to NCMEC and law enforcement agencies, Apple says it has significantly expanded its child safety resources while keeping user privacy as its focus. We hope that you found the article informative and that it gave you some insight into the upcoming iOS updates. For more content on Technology, Lifestyle, and Entertainment, keep visiting Path of Ex.

Feature Image Credit: Mint
